Two weeks ago I wrote about Parallel Similarity Transformation, which is useful iterative method for finding maximum eigen value of positive square matrices, along with respective eigen vector such that following equality is satisfied. I used SYCL DPC++ for implementing kernels, but in last weeks I discovered ways to improve upon my previous work. So today I decided to write about how I optimize similarity transformation kernels and present you with updated benchmark results.

I'll just pick each kernel required for computing maximum eigen value of positive square matrix and figure out ways how it can be improved in step-by-step manner. Following five kernels are required for performing parallel similarity transformation.

- kernelSumAcrossRows

- kernelMaxInVector

- kernelComputeEigenVector

- kernelSimilarityTransform

- kernelStopCriteria

I'll start with first one. All this kernel should do is simply compute sum of values stored in cells along each row of matrix. AN x N being a square matrix, it needs to output to a N-element vector where ith cell should hold sum of ith row of matrix. I want to perform N-many parallel summations --- as simple as that. With this requirement in mind, I'll write following kernel.

A high-level look at this kernel reveals it's not good idea to atomically compute sum of all elements along each row of AN x N. N-many memory locations of result vector of length N, are being operated on atomically to avoid any chance of data race. Each cell of result vector of length N, will be accessed N-many times by N-many designated work-items. This results into N2 global memory access, which is expensive. I'd like to reduce global memory access as much as possible. One simple way to do this in current context is to make use of reduction function of SYCL DPC++, which will help me in computing sum of all elements held by all work-items of subgroup. Using this subgroup method maps well to underlying SIMD instructions, which reduces communication cost, but does compute sum of all values held by subgroup participants. Finally I'll use atomic write to update sum in designated row of result vector, living in global memory, helping me to reduce global memory access to N2 / B, given that B = subgroup width. I'll express this with following kernel.

This kernel is indeed better than previous matrix row sum kernel, where N2 global memory accesses were performed. But I see one more way to attempt further optimization. I want to make use of faster local memory so that subgroup leader can atomically update respective work-group's sum, stored in designated local memory. It's possible to have multiple subgroups in a work-group, that way I'm able to reduce global memory access by another factor. Now only subgroup leaders are accessing local memory to update respective work-group's row sum, with their own subgroup level row sum. Finally after all work-items of this work-group are done, only work-group leader will access designated cell of global memory to atomically update respective row sum. With this update kernel should only access global memory N2 / B times, when B = work-group size, which should preferrably be greater than subgroup size. My final update to matrix row sum kernel looks like below.

Now it's good time to run these three kernels on both CPU and GPU, with various matrix dimension, to see how they perform. Following section shows result of running three variants of row sum kernel on CPU.

I see for random square matrix of dimension N x N, second variant of row summation kernel performs best. I expected third variant to perform at least as same as second one, if not better, though it seems second one is ~2x faster compared to last kernel. I compiled these kernels Ahead of Time, so that runtime kernel compilation time can be saved and launch on accelerator will be faster. As I've access to GPU, I've also ran it there and following are benchmark results I obtained.

Naive implementation i.e. first variant is performing much poor on GPU, but that's what I expected as all work-items are attempting to update global memory atomically, congestion should be high. I see this time third variant shines ! As I understand, in GPU it has dedicated local memory, which provides faster access compared to global memory. That's why third variant, where I make use of local memory to reduce global memory access and only work-group leaders access global memory one time to atomically update total row sum, performs better than others --- almost 2x faster than second variant, its closest competitor.

Now I'd like to spend some time in explaining how I'd attempt to write second kernel i.e. kernelMaxInVector. Business logic of this kernel should just find out maximum value from a given vector of size N. A naive way that comes to my mind first, is using atomic maximum value finder, where each work-item ( respresenting each cell of vector ) attempts to atomically compare its holding with some known global memory location, where finally maximum value is supposed to be stored. As my interest is finding out maximum value of vector, I'll have to initialize result memory location with 0. I'll write following kernel, depicting my naive attempt.

A next step that comes to my mind, for improving max value in vector finder kernel, is using SYCL DPC++ reduction kernel

for finding maximum value among all work-items of certain subgroup. This results in better mapping to underlying SIMD instructions.

Finally only subgroup leader will attempt to compare subgroup's maximum value with result in global memory location, which naturally

results into reduced access of global memory. In previous kernel, all work-items would have to compare their holding

with target in global memory, which results into N-many global memory accesses. But with this improvement, global memory access should

be reduced to N / B, when N = vector size, B = subgroup size.

Following is my attempt in writing this improved variant of kernelMaxInVector.

As I understand, second variant of max in vector kernel should perform better than first variant, but I've another idea

which I'd like to implement and benchmark. In quest of finding a way in reducing global memory access as much as possible,

I bring in idea of using local memory, where all subgroups of certain work-group help in computing maximum value of work-group

itself. And finally when all work-items of that work-group are done ( read reaches a synchronization point ), only work-group leader will attempt to access

global memory and perform atomic comparison to decide whether updation to result can be performed or not. This kernel

should reduce global memory access to N / B, when N = vector size, B = work-group size. Note, this technique will only

prove fruitful if work-group size is larger than subgroup size, specifally I'll set work-group such that it's evenly

divisible by subgroup size, which I'll specify using attribute understandable by dpcpp compiler.

Following is the attribute required for setting subgroup size for some kernel.

My final attempt in improving vector max value finder kernel is as below.

This is a time to benchmark three implementations for finding maximum value in vector. AOT compiled kernels on CPU perform as below.

I notice first variant, where all work-items are atomically comparing self value with global memory's

target value, doesn't perform that bad on CPU. It may be due to that CPU has better atomic operation support.

The final variant, which I expected to do better, is probably sufferring from the fact that on CPU, there's no

explicit local memory. Making subgroup leaders to write to local memory first, for finding work-group local maximum value

and then only work-group leader contributing in finding maximum value of vector by accessing/ comparing global memory ---

is incurring more cost than benefit, on CPU.

In below table, I present you with benchmark results I obtain after running same kernels on GPU. As GPU is less capable

in executing atomic operations, compared to CPU, I see first variant performing much worse on GPU. In second variant, subgroup's

work-items find maximum value using SYCL DPC++ reduction function, which is performing lot better as I expected. In this case,

contention should also be lower, as only subgroup leaders are supposed to be atomically accessing global memory. The final

variant, where I introduced local memory, so that I can reduce scope of global memory access by another factor, letting only

workgroup leaders atomically access global memory, performs almost as same as second variant. May be third technique doesn't bring

much on table, but it's another sound & efficient way to write this kernel.

Next kernel in my list, kernelComputeEigenVector, is very simple to implement, where each element of result vector is computed using following formula.

This problem itself is very parallel, no data dependency, so I'll write following kernel for parallelly computing result eigen vector from row sum vector and max value in row sum vector.

Notice, value stored in max_val memory location is in global memory, which is accessed N-many times during execution of this kernel. But this value is somewhat constant in this context, so I'd like to use some technique which will help me in reading this value from global memory only once. After that read value will be distributed among a set of work-items, not requiring each of them to read from global memory. This will result into reduced global memory access, which contributes in making whole compute pipeline faster. The set of work-items which will read from global memory only once, is called subgroup. As subgroups map well to underlying SIMD intrinsics, resulting code running on accelerator should perform better. My take at modifying this kernel is as simple as adding group_broadcast method when reading from memory location held behind max_val.

Benchmarking last two kernels, used for computing eigen vector, on CPU gives me following result. It looks both kernel variants perform almost same on CPU and my speculation of resulting into better SIMD usage in case of second variant of kernel, doesn't seem to be true.

Benchmarking on GPU, I see similar trend that both variants are performing almost same. I believe this is due to either I'm using group_broadcast improperly or it's not working as expected.

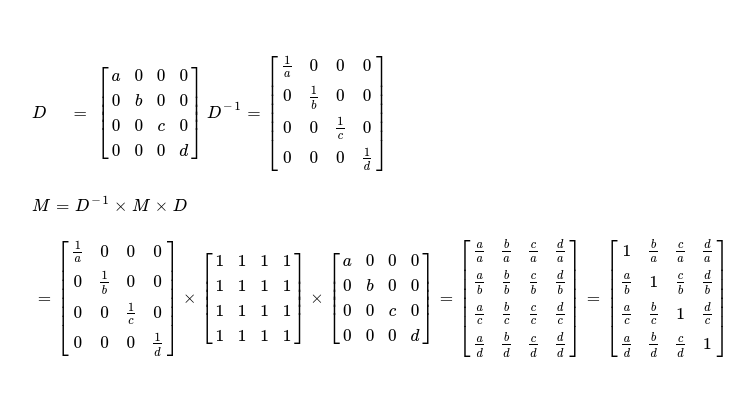

Next kernel I'll write is kernelSimilarityTransform, which is an important part in compute pipeline of whole Similarity Transformation method. This kernel itself helps in computing next iteration's matrix using following technique. I've already found row sum vector of a matrix, which I'll use in this kernel. Assume I've one vector of length N, forming one diagonal matrix and its respective inverse matrix is trivial.

Next iteration's matrix is computed as below.

This is a matrix multiplication problem, but two of three operands being diagonal matrices and two matrices being inverse of one another, I can reduce matrix multiplication problem to a reduced variant, as I derive it in above diagram. Writing a parallel implementation of this is pretty easy.

This kernel makes use of local memory to cache diagonal matrix's ( read row-sum vector ) cells, reducing global memory access. Benchmarking this kernel on CPU, GPU compares as below. As GPUs possess explicit local memory, benefits of using local memory is clearly visible on second column in following diagram.

Final kernel in pipeline i.e. kernelStopCriteria, examines row-sum vector and decides whether we should go to next iteration or not. When iterative method converges to result, all values in row-sum vector should be very close and absolute difference between a pair of consequtive values should be lesser than some predefined epsilon value --- error in floating point computation that I'm okay to live with. Let us take an example vector to demonstrate how I can write one parallel kernel for same.

For a vector of length N, I'll kick off N work-items where ith work-item accesses row-sum vector's ith and (i+1)th indices, for finding absolute difference between a pair of consequtive cells. Notice for work-item with index N-1, attempting to access global memory with index (i+1) = N should result into segmentation fault. I should be accessing index i and (i+1) % N of row-sum vector from each work-item. Implementing such a kernel is as easy as below.

This kernel is pretty simple, except all_of function, which is a SYCL group algorithm function, helps in evaluation of a boolean

predicate across all work-items of a work(/ sub)-group. The main problem I see with above kernel is duplicate access of global memory ( i.e. row-sum vector ).

Each cell of row-sum vector is accessed twice. For reducing global memory access, I've to resort to subgroup shuffling algorithm, so that within a subgroup

all work-items just read once from global memory, but then communicate among themselves, sharing their register cached values with other work-items in same

subgroup. I use one specific subgroup function --- shuffle_down, which helps me in obtaining ((i + 1) % B)th work-item's value

inside ith work-item, without explicitly accessing global memory. For sake of better demonstration purpose, let me take an example.

Say I've following vector, which I want to shuffle down, then assertion in following pseudocode block must hold.

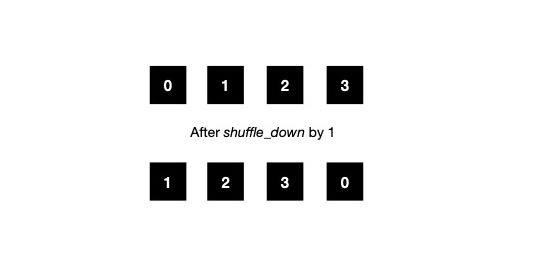

Pictorially it looks like below, when subgroup size is 4. If each of 4 work-items start with their initial value in first row, after shuffle_down by 1 place, their local register content should be updated to last row. This is somewhat similar to what I want to attain. I say somewhat because, this model doesn't fully fit my requirement when vector size N itself is greater than subgroup size, say B.

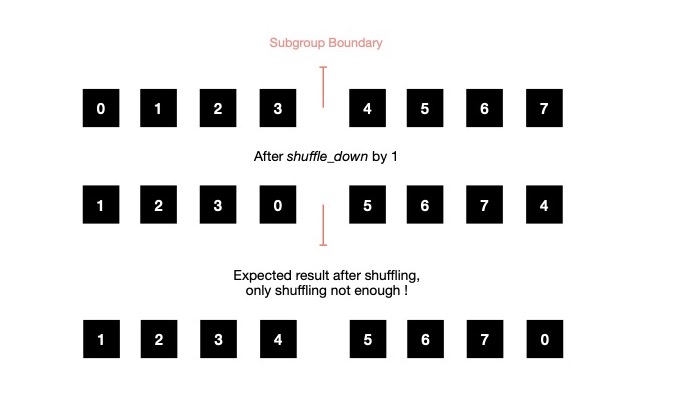

Check following scenario, where subgroup size is 4, but vector which is being shuffled down is of length 8. As shuffle_down method itself wraps around at subgroup boundary, produced result will look like below. But my expected result is something where index wrapping should happen at boundary of whole vector under operation.

As subgroup shuffling itself is not enough to satisfy requirement for checking stopping criteria, in parallel, I use conditional check based reading from global memory, though this results into duplicate reads from global memory at subgroup boundary. Then rest of the flow is same.

I can introduce local memory here, following same rationale as I did in some of previous kernels. Instead of letting all subgroup leaders atomically accessing global memory for updating status of whether convergence criteria has been satisfied in respective subgroup, I can just let work-group leaders do that part. In this updated setup, subgroup leaders update flag in local memory, where it's decided whether convergence criteria has been reached in work-group. And when all work-items of work-group reach one barrier ( synchronization point ), work-group leader will update work-group's result in global memory. Comparing benchmark of final variant of convergence criteria checker kernel shows much better performance on GPU.

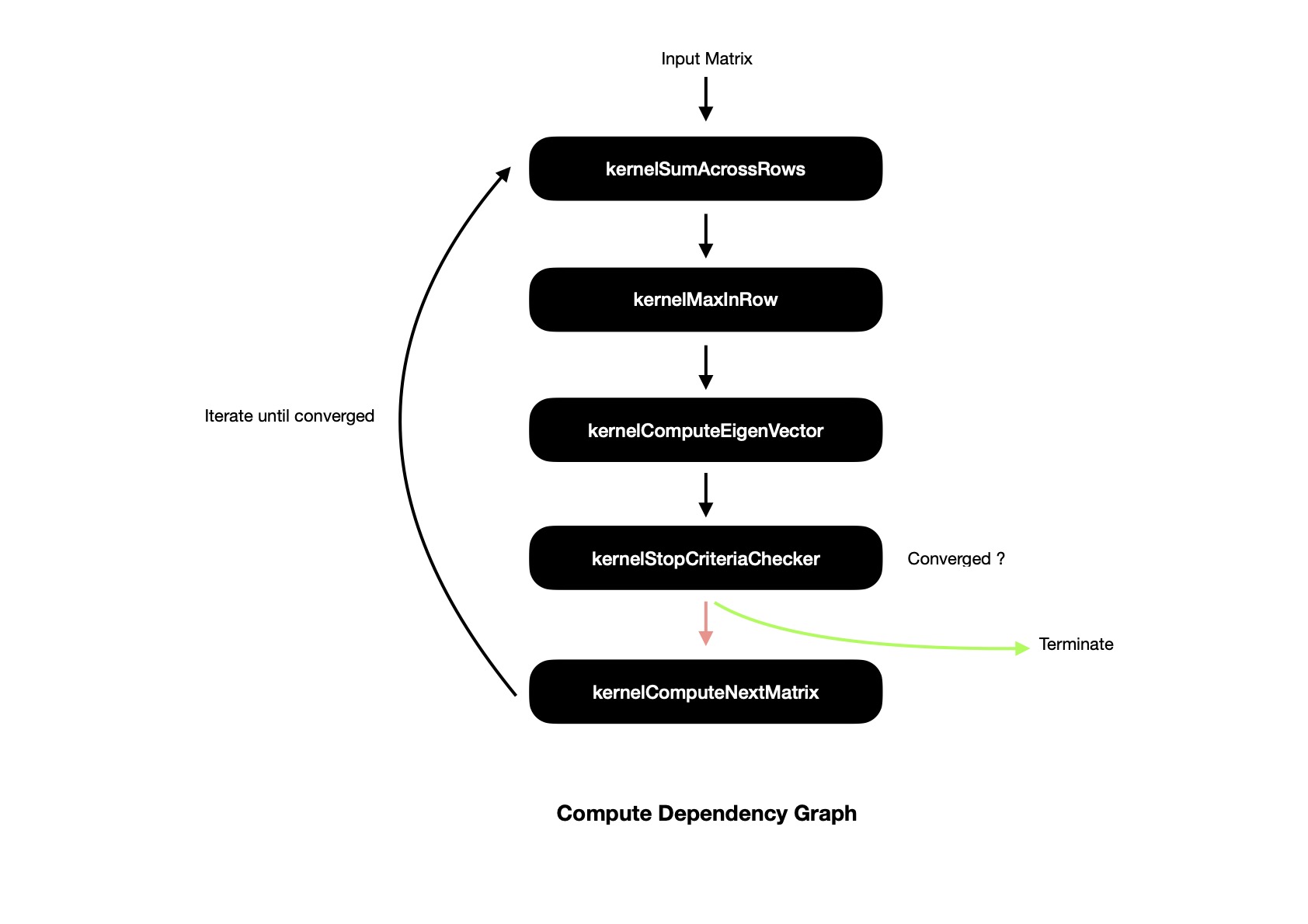

Empowered with all these (optimized) kernels, it's time to present whole compute pipeline with inter-kernel dependency. I use SYCL buffers for representing/ passing around data, which helps SYCL runtime to figure out computational dependency graph in terms of DAG.

Now I'd like to present final benchmark of whole compute pipeline of Parallel Similarity Transformation, depicting performance on both CPU, GPU. I run benchmark suite with N x N hilbert matrix, finding maximum eigen value and respective eigen vector. Last column in following table shows how many iterations were required before converging to maximum eigen value. For matrices of large dimension performance on GPU is better, because all component kernels perform better on GPU, making whole compute pipeline faster.

I keep parallel similarity transform method's full implementation here for future reference. In this post, I attempted to show how I'd go about writing parallel kernels and eventually optimize them. Major points I try to focus during optimization phase.

- Can global memory access be reduced ?

- Is it possible to use subgroup functions ?

- Is it possible to write local memory cached/ blocked variant ?

If you find out some mistake/ possible (yet undiscovered) way to improve some kernel, consider dropping me a line with that.

Have a great time !