This week I was trying to implement Matrix Multiplication, on GPGPU using Vulkan Compute API, which is pretty easy to parallelize, given that each cell of resulting matrix can be computed independent of any other cell. This is a simple data parallel problem, where same kernel can be run for each cell of resulting matrix without any data dependency, while input is read from two immutible buffers ( read Matrix A, B ). For understanding performance gain when run on GPGPU, I planned to implement naive matrix multiplication algorithm, which runs with time complexity O(N ** 2), in both CPU & GPU executable form. Given that GPUs are massively parallel machines, multiple cells of resulting matrix can be computed at a time, while paying very low synchronization cost, given nature of problem at hand.

When computing matrix multiplication on CPU, I make use of two nested loops, where each iteration of inner most loop computes value at a certain cell of resulting matrix with formula

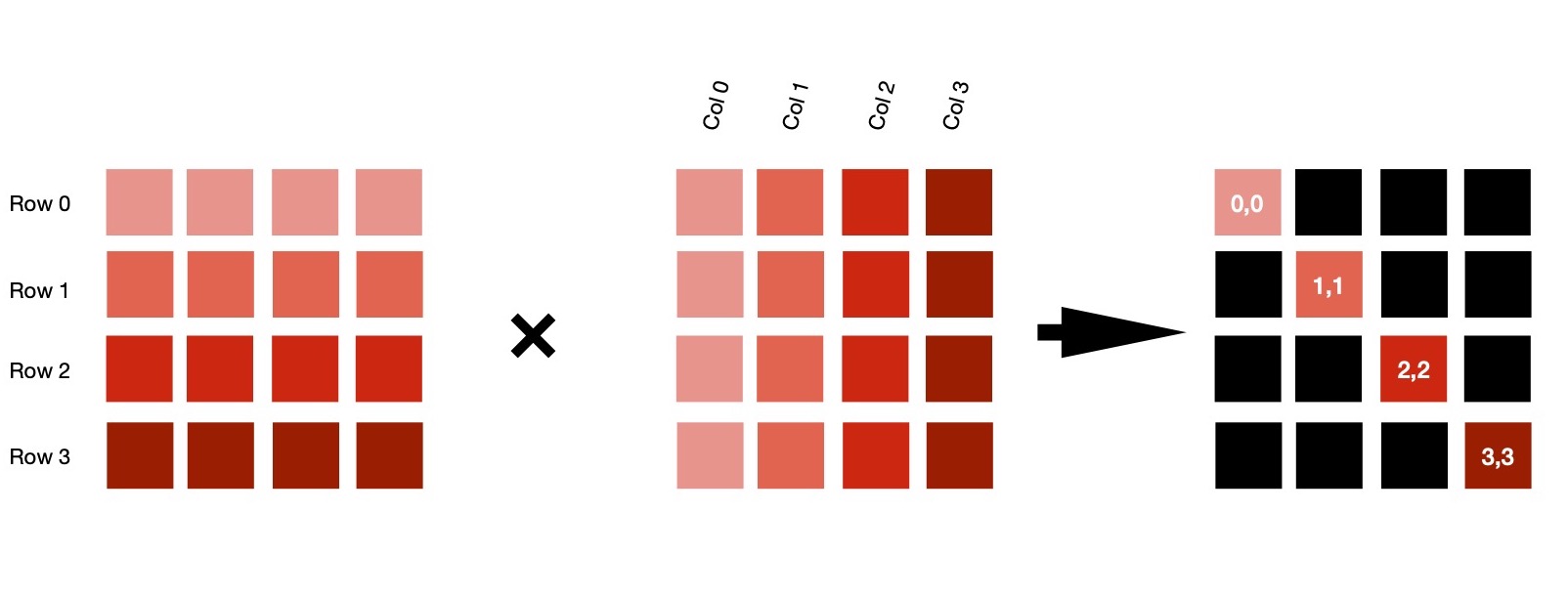

If I work with two 4 x 4 matrices, multiplication algorithm looks like 👇. For making it more generic, first LHS matrix's #-of columns & RHS matrix's #-of rows need to be equal. Otherwise, multiplication is not possible. Two nested loops iterate in such a way so that it's possible to generate indices of each cell of resulting matrix of dimension M x P, when LHS(M x N) & RHS(N x P).

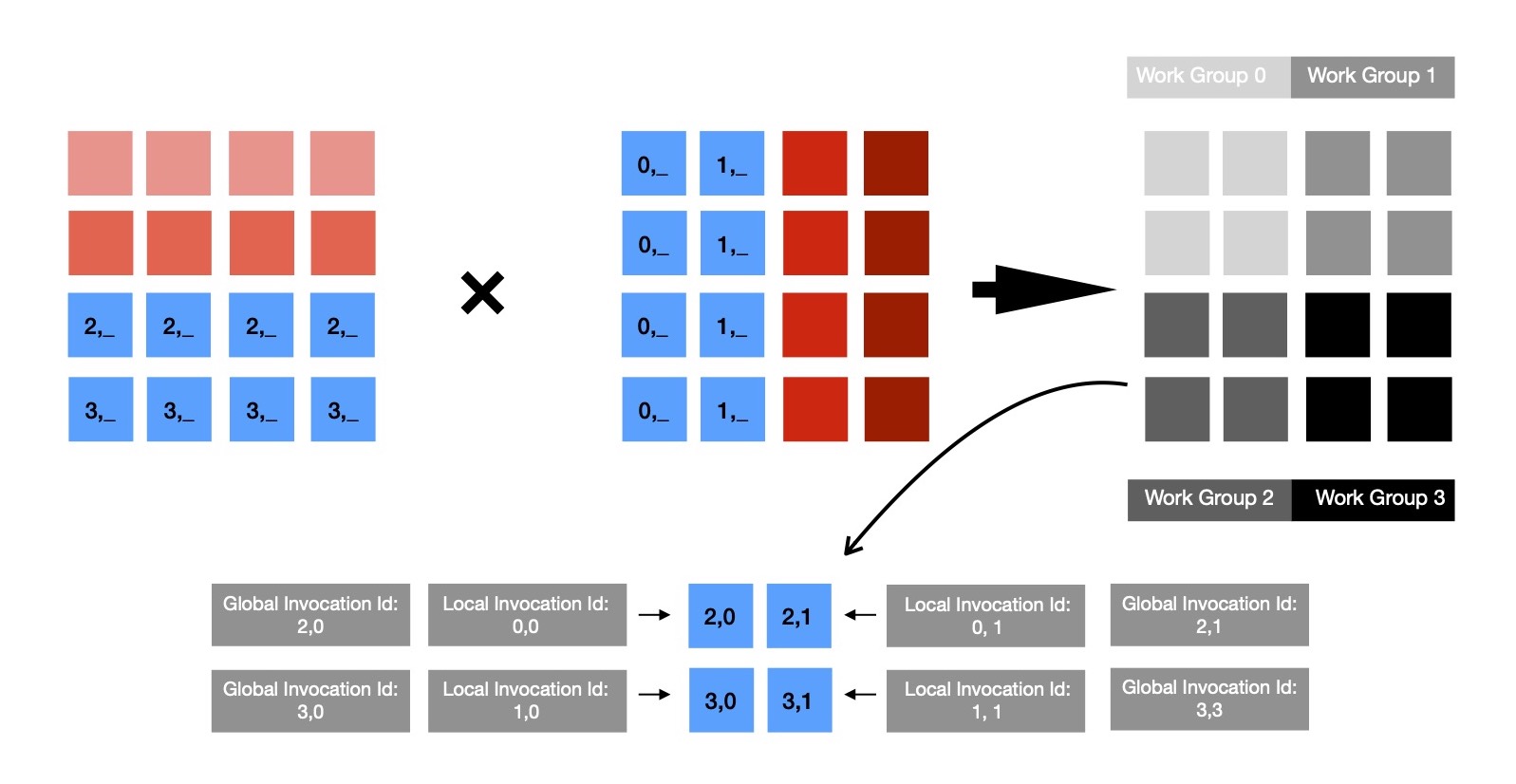

It's clearly understandable that each cell of resulting matrix C can be computed independently --- easily parallelizable. Now each invocation of compute shader ( read kernel running on GPU ) computes single cell of resulting matrix & exits; as many of these invocations can be run by GPU at same time, speed up is quite noticeable.

During each invocation of compute shader, following code snippet is run, which reads input from LHS's rowi & RHS's columnj; computes result & finally writes computed value for cell (i, j) at respective memory location. Notice, how one dimensional array being used for respresenting/ accessing two dimensional matrix.

Finally, it's time to run both CPU, GPU executable matrix multiplication implementation. Speed up obtained is noticeably large

due to nature of problem & SIMT execution model of GPUs. As I've used an old integrated graphics card, I believe executing same implementation

on discrete compute enabled graphics unit with subgroup size > 32, should result in much better performance. In my case, ~32 invocations execute

in parallel, if subgroup size > 32, no doubt speed up will be visibly higher.

I keep whole implementation here for future reference.

In coming days, I'll keep exploring Vulkan Compute more deeply, while implementing GPU executable parallel algorithms.