After exploring Random Linear Network Coding for some time, I've started writing one library in Golang, named kodr, where I'm on my way to implementing various RLNC schemes i.e. Full RLNC, Systematic RLNC, Sparse RLNC, Generational RLNC, Sliding Window RLNC, Perpetual RLNC etc. . This week I was thinking RLNC can be good fit for reliable, resilient storage systems where data chunks are already stored in erasure coded form in multiple geographically distant locations --- increasing redundancy, so that even if some of these locations become unreachable temporarily or permanently, original data can be recovered from available subset of data. A lot of these storage systems are using Reed Solomon Coding or some ( proprietary ) variant of it, but as I understand most of those coding schemes don't allow recoding as RLNC does. Good thing about RLNC is it can recode and construct newly coded pieces just from available coded pieces. It means for recoding RLNC decoder doesn't need to collect N-many linearly independent pieces & then decode them back to original N-many pieces, which are coded together again to generate "recoded" pieces, rather it takes M-many ( M <= N ) coded pieces ( read linear equations ) which are converted to new coded pieces ( read new linear equations ) by randomly drawing coding coefficients from finite field GF(2**8). And these RLNC (re)coded pieces can be equally useful to decoder when constructing back original pieces, as long as they're linearly independent. Recoding is beneficial when some storage locations of a cluster become temporarily or permanently unreachable, new redundancy in cluster can be created pretty fast by collecting some available coded pieces & generating new coded pieces from those. Today I'm interested in demonstrating same using a simulation I design using kodr.

Assuming I've a multimedia file of N-bytes ( where N = 16265760 ), which I'd like to store in a reliable storage cluster & get whole file back when need it. To be more specific it's a MP4 coded video file with unique content identifier dfc734fc40a98e8a6e041af13893f6170f073b160dfdc16621e76ad4e6b847b4. The storage cluster is reliable because it can handle failures of few of its components, upto certain threshold & still return me back my file. No doubt cluster creates redundancy in data it stores, which effectively increases storage cost. Simply storage cluster can replicate original file into three locations, making it able to handle upto 2 locations failing & it's still able to give me back file when I need it. Or cluster can decide to store file in erasure coded form by constructing pieces ( read smaller byte chunks ) & distributing them over multiple locations. When I ask it for whole file it collects required pieces from available locations & reconstructs whole file for me by solving some linear equations.

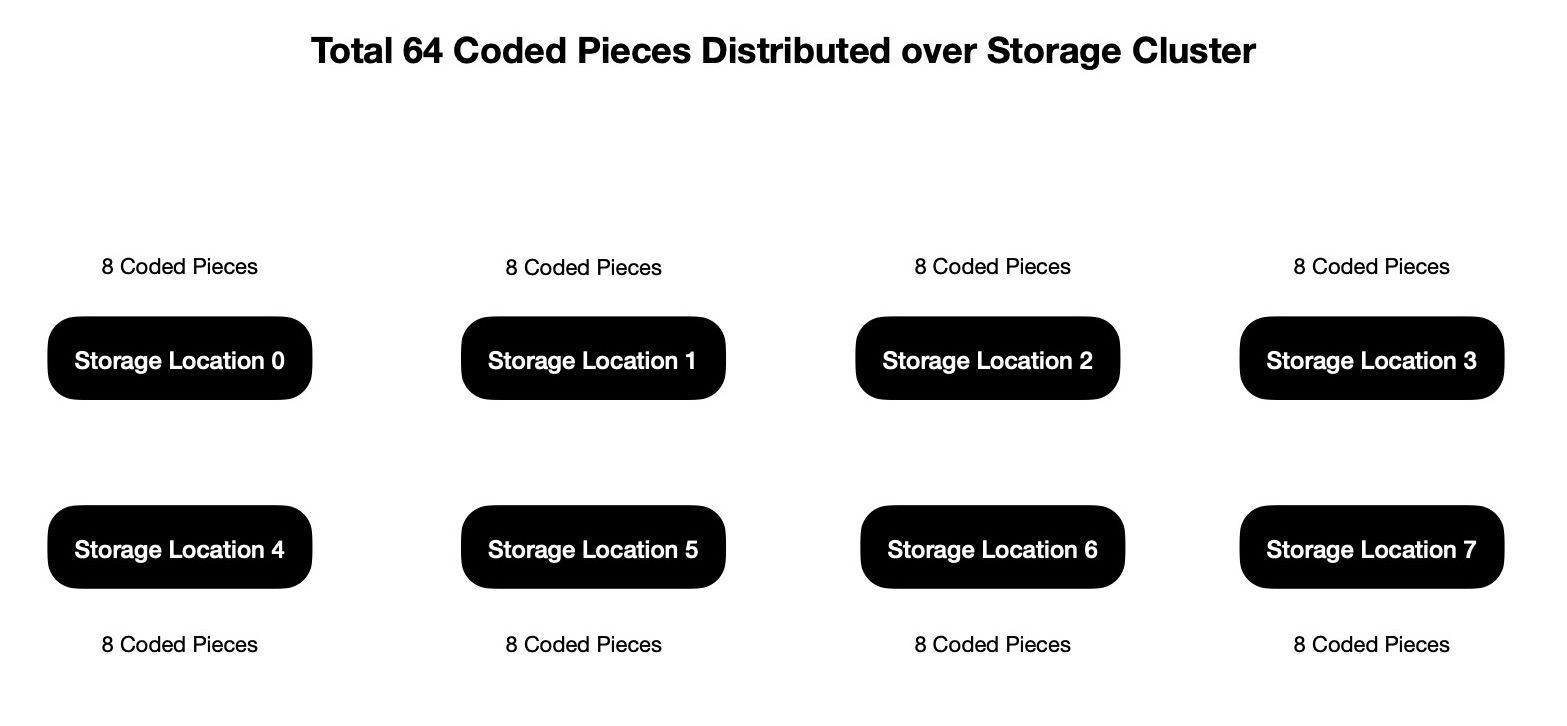

For coding N-bytes file, I need to specify how many pieces I want it to be chunked into. Say I want the file to be splitted into 32 pieces & code them together to construct 64 coded pieces, which cluster distributes

through out network. Generation size I choose to use is 32 & I decide to work with only one generation for this simulation.

Cluster employs 8 storage devices which are physically seperated from each other for storing 64 coded pieces I constructed --- each storage device storing 8 pieces. For constructing coded

pieces I make use of kodr, where I give it whole file i.e. a byte array of size 16_265_760 & express my intent of splitting it into 32 pieces.

As total file can be divided into 32 pieces of equal size ( i.e. each of 508_305 bytes ), no padding bytes are required to be added at end of byte array. If it was not the case, B-many bytes are to be added at end

of original byte slice of length N, so that (N+B)-bytes slice becomes properly divisible by piece count ( here it's 32 ). As 32 original pieces are being coded together, it results into carrying 32 more bytes in each coded

piece, which is called random coding coefficient vector, where each element is drawn from GF(2**8). GF(2**8) has 256 elements, each of them can be easily represented in 1 byte = 8 bits. So each coded piece carries

coded data of 508_305 bytes & 32 random coding coefficients. Another way to see coding vectors --- these are carrying information of how 32 original pieces are coded together so that I can exploit them during decoding

back to original pieces. This results into coded piece of size 508_337 bytes ( = 508_305 + 32 ).

I'm going to generate 64 coded pieces, among those any linearly independent 32 of them are enough to construct back original file --- I need to collect & process 16_266_784 bytes coded data ( in total )

to get back 16_265_760 bytes of original file, which is 1_024 bytes overhead.

Each coded piece is of 508_337 bytes, so for storing 64 coded pieces cluster network needs to employ 325_335_68 bytes storage. As I'm working with GF(2**8) which has 256 elements, the chance of generating linearly dependent pieces are pretty low, so most of these 64 coded pieces, spread across network are going to be linearly independent. For simulating that I'm randomly collecting total 32 coded pieces, I decide to random shuffle set of available 64 coded pieces & choose first 32 pieces.

After performing full RLNC decoding with 32 coded pieces, I expect to get back 32 original pieces. Turns out 32 collected coded pieces were linearly independent --- notice during encoding I just randomly chose

32 finite field elements from GF(2**8), for constructing a coding coefficient vector, meaning random coefficient choice performs pretty good.

Computing SHA256 hash on reconstructed data must give me dfc734fc40a98e8a6e041af13893f6170f073b160dfdc16621e76ad4e6b847b4. And indeed that happens ---

confirming data reconstruction was successful.

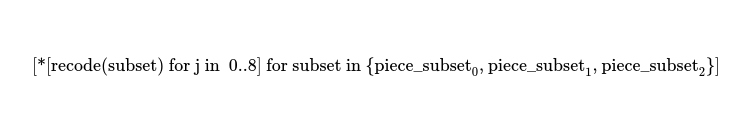

Now I'd like to simulate recoding & piece repopulation behaviour in face of partial network failure. Assuming Storage Location {1, 4, 6} have become permanently unavailable & cluster would like to get same level of resiliency back --- so it decides to generate 24 coded pieces ( those many have become unavailable ) from available 40 pieces spread across 5 available storage locations. Cluster participants can start producing new coded pieces from 8 coded pieces they've locally & collaboratively make total 24 coded pieces, which are now sent to 3 new storage locations, replacing unavailable ones. Let me take an imaginary scenario for simulation purpose, where 40 available coded pieces are collected & splitted into 3 equal size ( = 20 ) overlapping subsets.

Using subset_0's 20 pieces, I recode 8 new coded pieces. Similarly I generate 16 more coded pieces by recoding pieces from subset_{1, 2}. I've now 24 newly coded pieces, which are distributed

to 3 newly available locations, replacing Storage Location {1, 4, 6} --- cluster has again same level of resiliency.

Now I can decide to randomly collect any 32 linearly independent coded pieces & attempt to reconstruct back original video file. And generated flattened data has same

SHA256 hash 0xdfc734fc40a98e8a6e041af13893f6170f073b160dfdc16621e76ad4e6b847b4.

One thing to notice, ignoring hypothetical recoding scenario where 40 available coded pieces are splitted into 3 overlapping subsets of pieces of equal size ( = 20 ),

in real world cluster doesn't even need to ask all available 5 storage locations to collaboratively generate 24 pieces to fill up gap. Say cluster asks ( read master of cluster asking )

only one participant to generate 8 new coded pieces from 8 locally available coded pieces. Similarly cluster asks 2 more available participants to generate 16 ( = 8 + 8 ) new coded

pieces from respectively their locally available 8 pieces. Now network has 24 new coded pieces, which are just required to be sent over to new 3 storage locations, acting as replacement.

So not much of corrdination is required among peers for handling repopulation scenario.

Even it can be made simpler, say cluster wants to fill up gap of 24 missing coded pieces, it sends a message to network participants asking if someone having bandwidth ( read not busy doing other more important work )

would be interested in voluntarily constructing 24 coded pieces from 8 locally available pieces. If someone responds it will do so & help in sending those 24 new pieces to 3 newly available

storage locations --- cluster back to previous resiliency level.

This means, reconstruction-and-repopulation is not very expensive & it can be performed without much coordination --- all participants don't need to be involved in this business, only those

parties having lesser work at that moment, can join & help. It's not the case for Reed Solomon coded storage clusters where each new scenario like piece repopulation

needs to be handled by involving many more peers than required in RLNC based clusters.

One thing I'd like to mention, each coded piece is of size 508_337 bytes, which has two components.

- Random Coding Vector - 32 bytes

- Coded Data - 508_305 bytes

Rather than storing extra 32 bytes of coding vector, one 8/ 16 bytes seed can be stored for each coded piece, decreasing total storage requirement for 64 coded pieces in network. This 8/ 16 bytes seed can be used to generate 32 coding coefficients which will be treated like coding vector while recoding/ decoding. If 8 bytes seed is used for initialising pseudo random number generator, it saves 1536 bytes network wide for storing 64 coded pieces. In quite same way, with 16 bytes seed, 1024 bytes network wide saving can be attained.

I clearly understand, RLNC based storage clusters will shine. I maintain accompanying kodr-based simulation here.

As I keep learning new RLNC based techniques, I plan to implement those in my library kodr alongside.

Also I'd love to learn about your opinion around idea of using RLNC in decentralised storage systems.

Have a great time !